Embedded Touchscreen

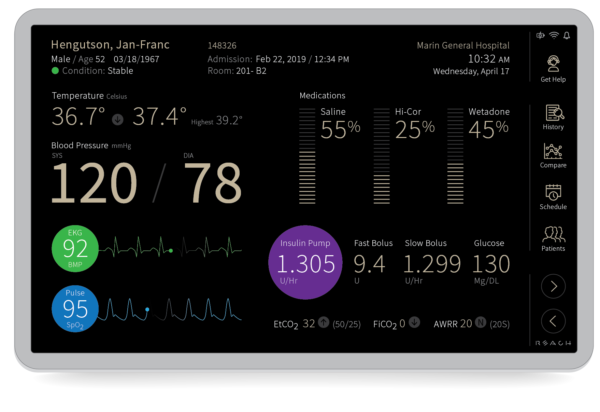

Engineers tell us that getting an embedded touchscreen up and running from scratch is time-consuming and expensive. There is a steep learning curve, it takes their focus away from their core competencies, and small changes cause big problems when maintaining the solution over time. The risk of higher development costs, missed production targets, and maintenance headaches causes them to look for something other than a homegrown solution. So they turn to Reach Technology embedded touchscreen modules.

Compare Embedded GUIs

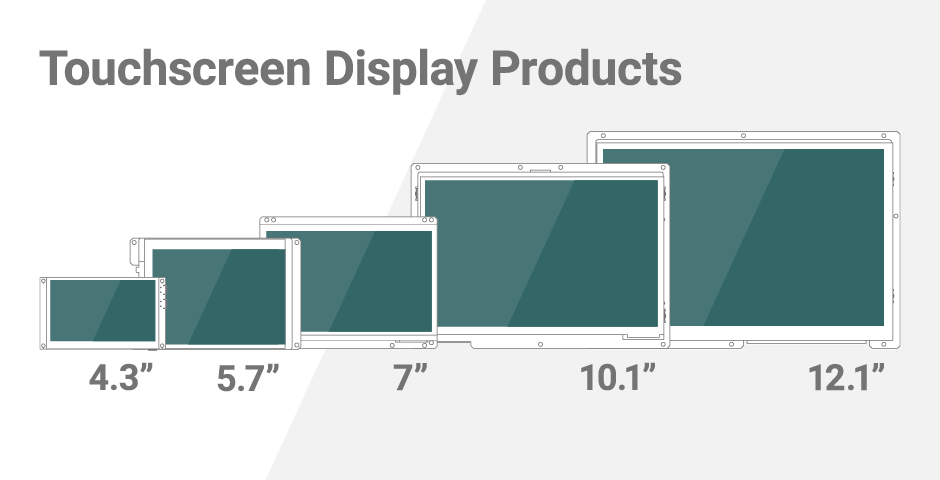

Your choice of sizes (4.3″, 5.7″, 7″, 10.1″ and 12.1″), I/O, processor, memory and touch type.

Choose Between Two Development Environments

What You Get When You Work with Us

Get Started with a Touchscreen Development Kit

3,500+ kits sold to date. 100% Satisfaction Guarantee.

Why separate Embedded GUI design and real-time processing?

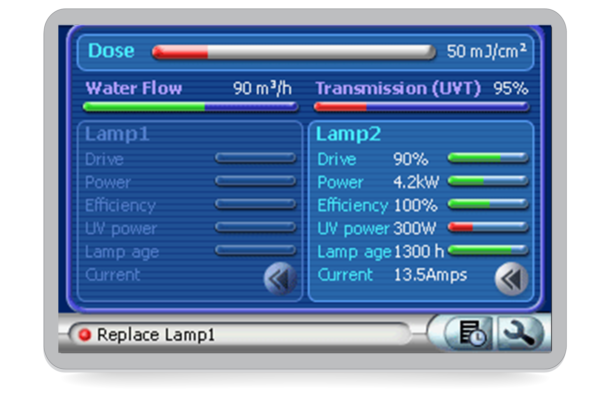

Using one processor for both real-time and embedded GUI functions seems less costly and conceptually simpler, but it doesn’t work out in practice. Embedded programmers are comfortable designing and implementing core functionality in application-specific microcontrollers, not in complex, multi-tasking GUI hosts. In our experience, it makes sense to keep real-time and GUI code on separate processors. GUI design needs to be flexible, so you can change screen sizes, display and touch technology, and so forth without impacting proven critical-to-function embedded code. Best of all, it is far more testable!

Full Embedded GUI Transcript

I want to explain as best I can why you want to separate the embedded part, the real guts of a product, from the graphical interface part, what we call the GUI. If you think about a piece of embedded industrial or medical equipment, you are going to have a core function. It’s like a loop. You are constantly doing something, and this could be in a skin laser. You measure temperatures. You are doing the laser pulses. Whatever you are doing, that is the main function of the piece of equipment.

We have companies that do vegetable sorting, fruit sorting, so they have multiple channels. They are looking at blueberries come through and deciding which ones to get rid of because they are off-color. This is sort of the core functionality of your product, and while it is doing whatever it’s doing, there are inputs coming in from the outside world. You have to react to different things. This is the core of functionality.

Within that, you are going to find there are certain times where what the core function does is very critical. If it doesn’t do this at the right time – let’s say there is a little part in here where it has to react to something coming in, and it has to react to it exactly going out – if it doesn’t do this thing, it is going to affect the functionality and the product. You have to write this in a way that works. The person writing the core, it is very low-level functionality. The thing is, it is hard to test, it’s hard to get right. Once you have the core functionality right, you sort of want to leave it alone.

The problem is, if I have a GUI, a graphical interface with buttons, and dials, and whatever, I have this thing, and it’s running on the same processor, how can I make sure that this is always going to work right, independent of what I’m doing on the GUI? The only way to do it is to put this part … wait until it is exactly in the critical function and then do all your knobs, and buttons, and playing around with it. That is very hard to do. It is complicated.

Essentially, having the GUI run on the same platform as the embedded control component makes testing very, very difficult. You can never be entirely sure that you have all the cases. The classic example is the Windows operating system. Anybody running Windows has had the machine either freeze or crash on them, and it’s because of the complex interaction of all the different drivers, programs, things you are running, things in the background, networking. It is all going on at the same time, and you simply cannot test all the combinations to know that you are going to cover all the cases, and the machines are different.

The long story is, the more you have going on at the same time on the same piece of hardware, the harder it is to test. If I am doing an embedded product, I never want to have the thing stutter, or look like it does not work, and you don’t know why. You want to test the thing, and the only way to test decently is to divide and conquer. You have to put a wall between these two. Let the embedded engineer who is doing the low-level programming do this and test it. This way you have a well-defined interface between the two, between the graphical interface and the core function.

This is typically done in a microcontroller, written in assembler, whatever. You don’t really care, but this is done by an embedded engineer who knows how to write this stuff, who knows the IO, who knows the hardware. They don’t want to have to know the GUI stuff. They just want to design a well-defined interface, and you can test. This could be called an API or it’s just called an interface, API being application programming interface. It is a well-defined interface. You can define it. You can write a test program for it, and then when this thing does what it’s supposed to do – given these inputs and outputs – this is a nice little thing. It doesn’t have to change if you change the GUI. That is key. It also is master of its own domain so when it decides to accept inputs from the GUI or asks for things from the GUI, it is at a time when it knows it’s okay. It is not reacting. It is not in a mode like it’s firing a laser at somebody’s skin. It knows, now I am in control of when I am doing the IO this side from the GUI.
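To make the idea concrete, here is a minimal sketch in Python of what such an interface could look like. Everything in it is an assumption made for illustration: the `Controller` class, the `SET`/`GET` text commands, and the `in_critical_section` flag are hypothetical and are not taken from any Reach Technology product. The point it shows is that the controller only queues GUI messages when they arrive and acts on them when it decides the moment is safe.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import List


@dataclass
class Controller:
    """Stand-in for the microcontroller side (hypothetical names throughout)."""
    setpoint: int = 0
    in_critical_section: bool = False
    _inbox: deque = field(default_factory=deque)

    def receive(self, line: str) -> None:
        """Called when a GUI message arrives: queue it, never act on it here."""
        self._inbox.append(line)

    def service_gui(self) -> List[str]:
        """Handle queued GUI messages, but only when the core loop allows it."""
        replies: List[str] = []
        if self.in_critical_section:
            return replies  # messages stay queued until the critical work ends
        while self._inbox:
            replies.append(self._handle(self._inbox.popleft()))
        return replies

    def _handle(self, line: str) -> str:
        """Interpret one command of the assumed text protocol."""
        parts = line.split()
        if len(parts) == 2 and parts[0] == "SET" and parts[1].isdigit():
            self.setpoint = int(parts[1])
            return "OK"
        if parts == ["GET"]:
            return f"VALUE {self.setpoint}"
        return "ERR"
```

Because the interface is just "lines in, lines out", a test program can drive it without any GUI attached: send `SET 42` while the critical flag is set, check that nothing changes, clear the flag, and check that the reply is `OK`.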

What I am trying to get across is the idea of having one processor do both the real-time stuff, the critical-to-function stuff … It doesn’t have to be real-time, and there are millions of definitions of real-time. Doing it all in one processor, even though it’s conceptually simple and looks like less cost, is not a good idea from a maintainability, from a divide-and-conquer, get-it-done, from a risk perspective. It is much better to divide and conquer because the domain-specific knowledge of the embedded engineer doing the low-level stuff and the domain knowledge of the GUI are different. They are significantly different. In the kind of industrial and medical products that we work with, the cost of the microcontroller – $5 or $2, whatever – is not a giant cost. The cost is probably more in developing the interaction, but there are a lot of good reasons to have this layer here. In protocols, they have layers all the time, and it’s to simplify things, just to make things independently testable. Same thing here. This is a better way to do it.
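The remark about protocol layers can be illustrated with a small sketch. The framing scheme below (one length byte, the payload, one additive checksum byte) and the function names `frame` and `unframe` are assumptions chosen for the example, not a description of any real product's wire format. It shows a layer that knows nothing about commands or screens, so it can be tested on its own.

```python
def frame(payload: bytes) -> bytes:
    """Wrap a payload as: length byte, payload, checksum byte (assumed format)."""
    if len(payload) > 255:
        raise ValueError("payload too long for a one-byte length field")
    checksum = sum(payload) % 256
    return bytes([len(payload)]) + payload + bytes([checksum])


def unframe(data: bytes) -> bytes:
    """Validate a frame and return its payload, or raise ValueError."""
    if len(data) < 2 or data[0] != len(data) - 2:
        raise ValueError("bad length")
    payload, checksum = data[1:-1], data[-1]
    if sum(payload) % 256 != checksum:
        raise ValueError("bad checksum")
    return payload
```

The layer above it, whatever commands the GUI and the controller exchange, only ever sees payloads that passed these checks, so each layer can change and be retested without touching the other.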

Embedded Touchscreens Made Easy:

Up in Days, Smoothly to Production. Get started with a Development Kit.

Reach Technology is now a part of Novanta.

Sales and Engineering

545 First Street

Lake Oswego, OR 97034

503-675-6464

sales@reachtech.com

techsupport@reachtech.com

Manufacturing

4600 Campus Place

Mukilteo, WA 98275

service@reachtech.com

Please send payments to:

Novanta Corporation

PO Box 15905

Chicago, IL 60693

accounts.receivable@novanta.com